Publications

2024

-

Low Tensor Rank Learning of Neural Dynamics

Arthur Pellegrino, N Alex Cayco Gajic, and Angus Chadwick

Advances in Neural Information Processing Systems, 2024

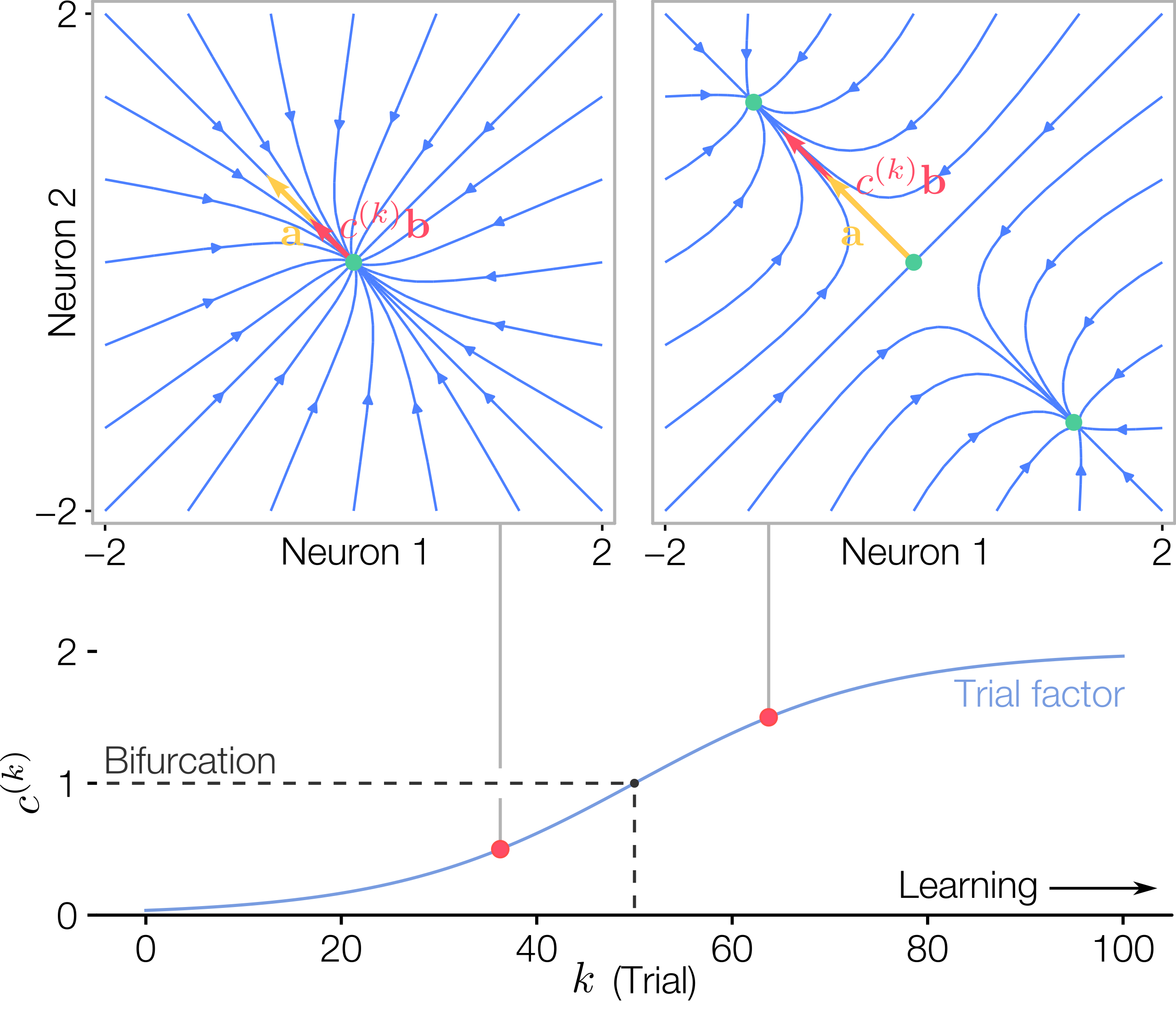

Learning relies on coordinated synaptic changes in recurrently connected populations of neurons. Therefore, understanding the collective evolution of synaptic connectivity over learning is a key challenge in neuroscience and machine learning. In particular, recent work has shown that the weight matrices of task-trained RNNs are typically low rank, but how this low rank structure unfolds over learning is unknown. To address this, we investigate the rank of the 3-tensor formed by the weight matrices throughout learning. By fitting RNNs of varying rank to large-scale neural recordings during a motor learning task, we find that the inferred weights are low-tensor-rank and therefore evolve over a fixed low-dimensional subspace throughout the entire course of learning. We next validate the observation of low-tensor-rank learning on an RNN trained to solve the same task. Finally, we present a set of mathematical results bounding the matrix and tensor ranks of gradient descent learning dynamics which show that low-tensor-rank weights emerge naturally in RNNs trained to solve low-dimensional tasks. Taken together, our findings provide insight on the evolution of population connectivity over learning in both biological and artificial neural networks, and enable reverse engineering of learning-induced changes in recurrent dynamics from large-scale neural recordings.

@article{pellegrino2024low,
  title = {Low Tensor Rank Learning of Neural Dynamics},
  author = {Pellegrino, Arthur and Cayco Gajic, N Alex and Chadwick, Angus},
  journal = {Advances in Neural Information Processing Systems},
  volume = {36},
  year = {2024},
  url = {https://proceedings.neurips.cc/paper_files/paper/2023/hash/27030ad2ec1d8f2c3847a64e382c30ca-Abstract-Conference.html},
}

2023

-

Disentangling Mixed Classes of Covariability in Large-Scale Neural Data

Arthur Pellegrino, Heike Stein, and N Alex Cayco-Gajic

Accepted at Nature Neuroscience, 2023

Recent work has argued that large-scale neural recordings are often well described by patterns of co-activation across neurons. Yet, the view that neural variability is constrained to a fixed, low-dimensional subspace may overlook higher-dimensional structure, including stereotyped neural sequences or slowly evolving latent spaces. Here, we argue that task-relevant variability in neural data can also co-fluctuate over trials or time, defining distinct covariability classes that may co-occur within the same dataset. To demix these covariability classes, we develop a new unsupervised dimensionality reduction method for neural data tensors called sliceTCA. In three example datasets, including motor cortical activity during a classic reaching task in primates and recent multi-region recordings in mice, we show that sliceTCA can capture more task-relevant structure in neural data using fewer components than traditional methods. Overall, our theoretical framework extends the classic view of low-dimensional population activity by incorporating additional classes of latent variables capturing higher-dimensional structure.

@article{pellegrino2023disentangling,
  title = {Disentangling Mixed Classes of Covariability in Large-Scale Neural Data},
  author = {Pellegrino, Arthur and Stein, Heike and Cayco-Gajic, N Alex},
  journal = {Accepted at Nature Neuroscience},
  year = {2023},
  url = {https://www.biorxiv.org/content/10.1101/2023.03.01.530616v1},
}